Rate Limiting

A difficult topic in networking to fully understand is rate limiting, or policing, traffic. When most people hear “your speed is 50 Mbps,” they assume the router is throttling the data and sending it at 50 Mbps. This is only half true. Yes, 50 megabits’ worth of data may pass in one second, but that is not the true “speed” at which the router sends the data. A router (or any device) always sends at line rate. What the heck does that mean? Well, on a gigabit interface, it would be 1 Gbps. Speed and duplex settings aside, the router cannot slow down the bits on the wire; it just wouldn’t be practical to have one device slow its clock rate and force every other device in the collision domain to do the same. Not to mention the question of how you would police some, but not all, of the traffic leaving an interface.

So, how does a router achieve the goal of limiting a data flow to 50 Mbps? It starts sending the data, at line rate, until the burst size is reached. The burst size is the other variable needed when setting up a limiter. I’ve seen all sorts of different burst sizes throughout my career and could probably write an entire article about it. Technically, it just needs to be larger than the MTU of the interface, but setting it that low is definitely not a good idea. I’m sure there have been many debates about the “right” size for a given environment, and there are all sorts of variables to consider, but a good starting point is interface speed (in bits per second) × burst duration (in seconds) / 8, which gives the burst size in bytes. At least, that’s what Juniper recommends; they say to start with 5 ms for the burst duration. In low-latency environments, you might not have to worry too much about the size. In high-latency environments with large TCP window sizes, you might run into trouble with a burst size set too low.
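As a quick sanity check, here is that starting-point formula as a small Python helper. The function name is just for illustration, and the 5 ms default is the suggested starting duration mentioned above, not a value that suits every environment.

```python
def burst_size_bytes(interface_bps, burst_ms=5.0):
    """Starting-point burst size: interface speed x burst duration / 8."""
    # bits per second * milliseconds / 1000 gives bits; / 8 gives bytes
    return round(interface_bps * burst_ms / 8000)


if __name__ == "__main__":
    for name, bps in (("100 Mbps", 100_000_000), ("1 Gbps", 1_000_000_000)):
        print(f"{name}: {burst_size_bytes(bps)} bytes")
```

For a gigabit interface and a 5 ms burst, this works out to 625,000 bytes.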

Let’s say we configure a burst size of 1 MB. Our router sends 1 MB (assuming there is 1 MB worth of data waiting to be sent) at 1 Gbps, then stops sending until its “bucket” starts to fill again. Perhaps you’ve heard of the token bucket?
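To put rough numbers on that, the sketch below works out how long a 1 MB burst takes to leave a 1 Gbps interface, and how long an empty bucket would take to refill completely at a 50 Mbps rate if nothing were sent in the meantime. It assumes 1 MB means 1,000,000 bytes; the helper name is made up for this example.

```python
def seconds_to_move(num_bytes, rate_bps):
    """Time needed to move num_bytes at rate_bps (bits per second)."""
    return num_bytes * 8 / rate_bps


BURST_BYTES = 1_000_000      # assumption: 1 MB = 1,000,000 bytes
LINE_RATE_BPS = 1e9          # gigabit interface
CIR_BPS = 50e6               # policed rate

if __name__ == "__main__":
    drain = seconds_to_move(BURST_BYTES, LINE_RATE_BPS)
    refill = seconds_to_move(BURST_BYTES, CIR_BPS)
    print(f"burst leaves the interface in {drain * 1000:.0f} ms")
    print(f"empty bucket refills in {refill * 1000:.0f} ms")
```

So the burst itself is over in about 8 ms, while a full refill takes about 160 ms.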

The Token Bucket

Picture this scenario. You have a kiddie pool that needs filling and no garden hose. You do have a bucket, however, and could use the shoulder exercise. In this analogy, the water is constant YouTube data, the spigot is the committed information rate (CIR), the bucket size is the burst size, the interface speed is how quickly the water (data) flows out of the overturned bucket (which, unless you want to be pedantic, doesn’t vary) and the pool is your browser. Let’s also assume there’s no walking involved, as if the pool is next to the spigot and you are turning at the waist. While overturning the bucket is pretty fast, the refill at the spigot takes time, and you can think of that refill as the time the router must wait before sending more traffic. Increasing the CIR, or opening up that spigot valve more, will result in the router waiting less time to send another burst.

You can see here that increasing the bucket size may not fill the pool any faster (maybe just burn your shoulders more), but it may impact the traffic in ways we won’t get into too much here. Just remember that the router is sending at line rate for the duration of the burst. Where you could run into problems with a large burst size is when there is a second, third, or fourth flow also trying to get out of the interface while this burst of traffic is happening. Now, an interface scheduler may have to take over and start shaping the traffic. You’ll start seeing delays and queue drops.

What may get lost in the analogy is that the bucket is refilling at the same time it is emptying. Also, the router doesn’t necessarily wait for the bucket to completely fill before sending again so each successive burst of traffic may not be the full burst size. In fact, if the data rate towards the policer is high enough, you may never see a burst again and the output would appear to be a constant, albeit lower, stream of packets.
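The refill-while-emptying behavior is easier to see in a few lines of code. Below is a minimal sketch of an idealized token bucket policer in Python. It is not how any particular router implements policing; the function names are made up, the bucket is assumed to start full, and the input is assumed to be a perfectly even stream of packets.

```python
def police(packets, cir_bps, burst_bytes):
    """Return one verdict per packet: True = forwarded, False = dropped.

    packets is an iterable of (arrival_time_seconds, size_bytes) in time order.
    """
    rate = cir_bps / 8.0          # refill rate in bytes per second
    tokens = float(burst_bytes)   # assumption: the bucket starts full
    last = None
    verdicts = []
    for arrival, size in packets:
        if last is not None:
            # Tokens accumulate between arrivals, capped at the bucket size
            tokens = min(burst_bytes, tokens + (arrival - last) * rate)
        last = arrival
        if size <= tokens:
            tokens -= size        # enough credit: forward and pay for it
            verdicts.append(True)
        else:
            verdicts.append(False)  # not enough credit: drop, keep tokens
    return verdicts


def constant_stream(rate_bps, size_bytes, seconds):
    """Evenly spaced packets of size_bytes arriving at rate_bps."""
    interval = size_bytes * 8 / rate_bps
    count = int(seconds / interval)
    return [(i * interval, size_bytes) for i in range(count)]


if __name__ == "__main__":
    stream = constant_stream(100e6, 1500, 10)
    verdicts = police(stream, cir_bps=50e6, burst_bytes=1_000_000)
    print(f"forwarded {sum(verdicts)} of {len(verdicts)} packets")
```

In this idealized model, with 100 Mbps offered against a 50 Mbps limit, every packet passes while the initially full bucket drains. After that, the policer settles into forwarding roughly every other packet, which is the steady, lower-rate stream described above.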

So, how can we picture packets being dropped? Let me drop another analogy on you. If you’re like me, you have to do everything yourself, including changing your car’s oil. Now, here the data is your carton of fresh 5W-30, the bucket or burst size is the funnel, the interface speed is gravity, or the rate at which the oil falls after leaving the funnel, and the CIR is the rate to which the oil is slowed by the taper of the funnel and its reduced opening. Oil being much more viscous than water, it’s easy to picture packets being dropped if you’ve ever poured oil faster than the funnel can drain. One thing this analogy lacks, however, is a depiction of the burstiness or choppiness of the policed traffic.

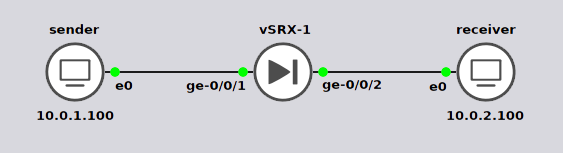

Let’s run a few tests with different burst sizes to see how they impact the traffic. For these tests, I am using a Juniper SRX to do the rate limiting and two virtual machines running Linux as the endpoints.

I am running iperf over UDP so I can have a constant stream of data without acknowledgments getting in the way when analyzing the data. I use a length (buffer size) of 1472 bytes to avoid fragmentation, since a 1472-byte payload plus 8 bytes of UDP header and 20 bytes of IP header makes a 1500-byte packet, and I specify a bandwidth of 100 Mbps.

Receiver 10.0.2.100

iperf3 -s

Sender 10.0.1.100

iperf3 -c 10.0.2.100 -u -b 100M -l 1472

To capture the traffic on the receiver, I use tcpdump with a snap length of 42 bytes: 14 for the Ethernet header, 20 for the IP header and 8 for the UDP header.

sudo tcpdump -i ens3 -w 1m_burst.pcap -s 42 udp

The following is configured on the firewall. I am matching on UDP so that the TCP traffic iperf uses to set up the session doesn’t count towards the limiter.

    [edit]
    root@vSRX-1# show firewall
    family inet {
        filter rate-limit {
            term udp {
                from {
                    packet-length 1500;
                    protocol udp;
                }
                then policer 50m;
            }
            term all {
                then accept;
            }
        }
    }
    policer 50m {
        if-exceeding {
            bandwidth-limit 50m;
            burst-size-limit 1m;
        }
        then discard;
    }

    [edit]
    root@vSRX-1# show interfaces
    ge-0/0/1 {
        unit 0 {
            family inet {
                address 10.0.1.1/24;
            }
        }
    }
    ge-0/0/2 {
        unit 0 {
            family inet {
                filter {
                    output rate-limit;
                }
                address 10.0.2.1/24;
            }
        }
    }

Got some pretty interesting results.

Test #1 15kB burst size.

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.07 sec 8.12 MBytes 6.77 Mbits/sec 0.006 ms 78358/84141 (93%) receiver

Test #2 150kB burst size.

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.07 sec 20.7 MBytes 17.2 Mbits/sec 0.007 ms 69392/84141 (82%) receiver

Test #3 600kB burst size.

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.07 sec 58.1 MBytes 48.4 Mbits/sec 0.021 ms 42777/84168 (51%) receiver

Test #4 1MB burst size.

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.07 sec 58.5 MBytes 48.7 Mbits/sec 0.002 ms 42502/84150 (51%) receiver

Now, let's write a quick script to count runs of sequential UDP packets in the pcap files from the above tests. The reason I chose to avoid fragmentation was so that each packet on the wire would have a sequential ID in the IP header. Our script will go through each packet and compare its ID with that of the previous packet. When a packet is dropped, its ID will be missing from the capture, which ends the current run of sequential packets.
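A minimal sketch of such a script, using only the Python standard library, might look like the following. It assumes the capture is in the classic pcap format with untagged Ethernet frames carrying IPv4 (as with the tcpdump command above), and all function names are illustrative.

```python
import struct
import sys
from collections import Counter

ETH_HDR_LEN = 14  # assumption: Ethernet II header, no VLAN tag


def ip_ids_from_pcap(buf):
    """Yield the IPv4 Identification field of each packet in a pcap byte string."""
    magic = buf[:4]
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):
        endian = "<"   # little-endian capture (micro- or nanosecond stamps)
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):
        endian = ">"   # big-endian capture
    else:
        raise ValueError("not a classic pcap file")
    offset = 24  # skip the global file header
    while offset + 16 <= len(buf):
        # Per-packet header: ts_sec, ts_frac, captured length, original length
        _sec, _frac, incl_len, _orig = struct.unpack_from(endian + "IIII", buf, offset)
        offset += 16
        data = buf[offset:offset + incl_len]
        offset += incl_len
        # Keep only IPv4 frames long enough to contain the ID field
        if len(data) >= ETH_HDR_LEN + 6 and data[12:14] == b"\x08\x00":
            yield struct.unpack_from("!H", data, ETH_HDR_LEN + 4)[0]


def run_lengths(ids):
    """Lengths of consecutive runs where each ID is the previous ID plus one."""
    runs, prev, count = [], None, 0
    for ip_id in ids:
        if prev is not None and ip_id == (prev + 1) % 65536:  # ID wraps at 16 bits
            count += 1
        else:
            if count:
                runs.append(count)
            count = 1
        prev = ip_id
    if count:
        runs.append(count)
    return runs


if __name__ == "__main__":
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            runs = run_lengths(ip_ids_from_pcap(f.read()))
        print(f"{path}: {sum(runs)} packets in {len(runs)} runs")
        for length, times in Counter(runs).most_common(5):
            print(f"  run of {length}: {times} times")
```

It would be invoked with one or more capture files as arguments, for example `python3 count_runs.py 1m_burst.pcap`, where `count_runs.py` is a hypothetical file name.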

Running the pcap files through the script will get the following output.